Human-Inspired Multi-Agent Navigation using Knowledge Distillation

Clemson University

In IEEE/RSJ International Conference on Intelligent Robots and Systems, 2021.

Comparison videos (columns): Reference, Ours, RL w/o KD (reinforcement learning without knowledge distillation), ORCA.

Abstract

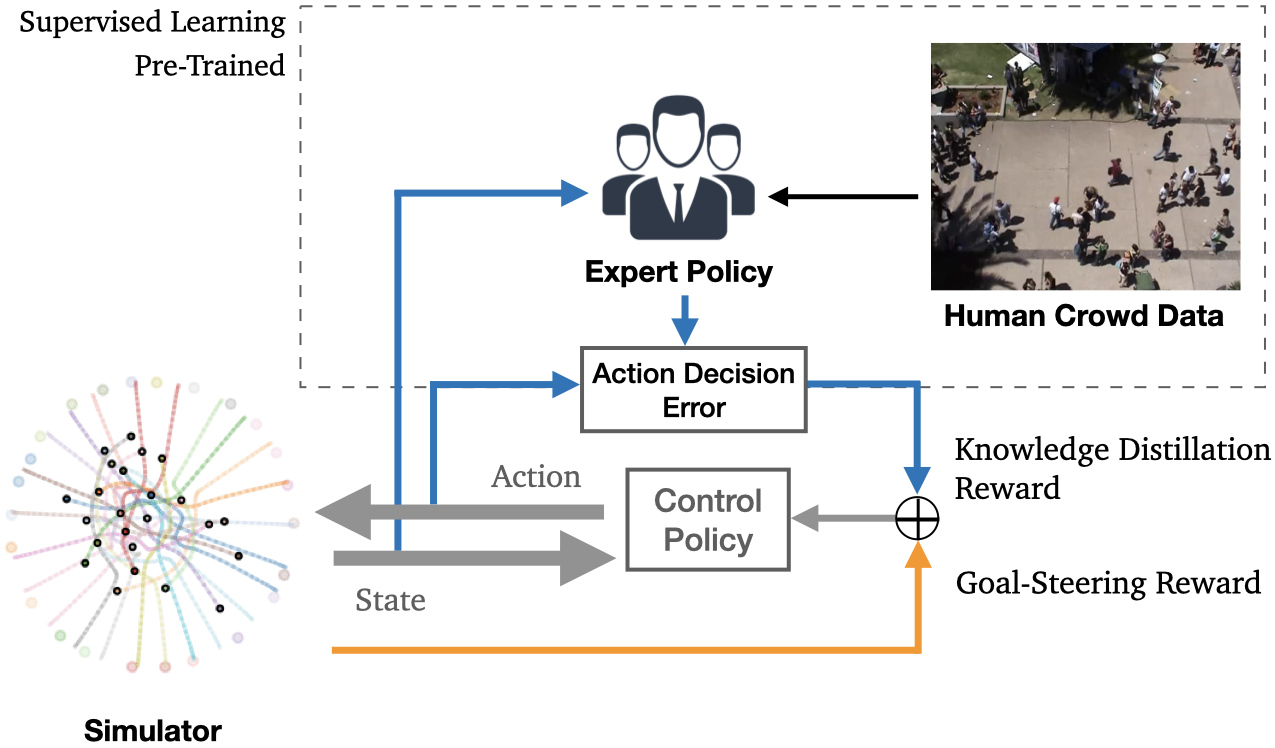

Despite significant advancements in the field of multi-agent navigation, agents still lack the sophistication and intelligence that humans exhibit in multi-agent settings. In this paper, we propose a framework for learning a human-like general collision avoidance policy for agent-agent interactions in fully decentralized, multi-agent environments. Our approach uses knowledge distillation with reinforcement learning to shape the reward function based on expert policies extracted from human trajectory demonstrations through behavior cloning. We show that agents trained with our approach can take human-like trajectories in collision avoidance and goal-directed steering tasks not provided by the demonstrations, outperforming the experts as well as learning-based agents trained without knowledge distillation.
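To make the reward-shaping idea concrete, here is a minimal sketch under stated assumptions. It assumes that each behavior-cloned expert is a function mapping an observation to a 2D action (e.g. a velocity), and that the distillation term is a Gaussian kernel on the distance between the agent's action and the mean of the experts' suggestions. The function name `kd_shaped_reward`, the averaging over experts, and the parameters `kd_weight` and `sigma` are hypothetical illustrations, not the paper's exact formulation.

```python
import math
from typing import Callable, Sequence, Tuple

# A 2D action, e.g. a preferred velocity (vx, vy).
Action = Tuple[float, float]
# A hypothetical behavior-cloned expert: maps an observation to an action.
Expert = Callable[[object], Action]


def kd_shaped_reward(
    obs: object,
    action: Action,
    experts: Sequence[Expert],
    task_reward: float,
    kd_weight: float = 0.5,  # hypothetical weight of the distillation term
    sigma: float = 1.0,  # hypothetical kernel bandwidth
) -> float:
    """Return the task reward plus a distillation bonus in (0, kd_weight].

    The bonus is a Gaussian kernel on the squared distance between the agent's
    action and the mean of the experts' actions for the same observation.
    This is an illustrative assumption, not the paper's exact reward.
    """
    if not experts:
        # No teacher available: fall back to the plain task reward.
        return task_reward
    suggestions = [expert(obs) for expert in experts]
    mean_x = sum(a[0] for a in suggestions) / len(suggestions)
    mean_y = sum(a[1] for a in suggestions) / len(suggestions)
    sq_dist = (action[0] - mean_x) ** 2 + (action[1] - mean_y) ** 2
    kd_bonus = math.exp(-sq_dist / (2.0 * sigma ** 2))
    return task_reward + kd_weight * kd_bonus


if __name__ == "__main__":
    # Toy expert that always suggests walking along +x at 1 m/s.
    experts = [lambda obs: (1.0, 0.0)]
    print(kd_shaped_reward(None, (1.0, 0.0), experts, task_reward=0.0))  # 0.5
    print(kd_shaped_reward(None, (0.0, 0.0), experts, task_reward=0.0))  # ~0.303
```

Keeping the bonus bounded means the distillation term nudges the policy toward expert-like actions without overriding the task reward (goal reaching, collision avoidance); how the two terms are actually balanced in the method is not specified here.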

BibTeX

    @inproceedings{kdma,
      author={Xu, Pei and Karamouzas, Ioannis},
      booktitle={2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
      title={Human-Inspired Multi-Agent Navigation using Knowledge Distillation},
      year={2021},
      pages={8105--8112},
      doi={10.1109/IROS51168.2021.9636463}
    }